Introduction to Machine Learning

Machine learning sits at the intersection of computer science and statistics and gives computers the ability to learn from data. It is a subfield of artificial intelligence concerned with algorithms that process large amounts of information, recognize patterns, and make data-driven decisions without being explicitly programmed for each task. The field has its conceptual roots in the mid-20th century, with pivotal contributions from researchers like Alan Turing and Arthur Samuel, the latter often credited with coining the term “machine learning” in 1959. Samuel’s pioneering work on self-learning checkers programs laid the groundwork for what would become an expansive and transformative discipline.

Machine learning now underpins applications in fields as diverse as healthcare, finance, retail, and technology. For instance, medical diagnostic tools incorporate machine learning algorithms to better predict patient outcomes and personalize treatment plans. Similarly, in the financial sector, these algorithms power high-frequency trading systems, detect fraudulent activities, and assess credit risk. Retail giants employ machine learning to optimize supply chains, personalize shopping experiences, and launch targeted marketing campaigns, while technology companies use it in speech recognition, image processing, and natural language processing applications.

At its core, machine learning relies on three primary methodologies: supervised learning, unsupervised learning, and reinforcement learning. Supervised learning involves training algorithms on labeled datasets to predict outcomes based on input data. Unsupervised learning, on the other hand, deals with identifying patterns and relationships in data without prior labels. Reinforcement learning is characterized by an agent that learns to achieve a goal through trial and error, receiving rewards or penalties as feedback.

With its unparalleled ability to process and interpret complex data, machine learning continues to revolutionize industries and enhance our everyday lives. Its impact is poised to grow as advancements in computational power and algorithmic sophistication drive further innovation. This transformative technology underscores a shift towards data-centric decision-making, ushering in new possibilities and applications across the spectrum of human endeavor.

Types of Machine Learning

Machine learning, a pivotal aspect of artificial intelligence, can be broadly segmented into three principal types: supervised learning, unsupervised learning, and reinforcement learning. Each type possesses unique characteristics and applications, making it essential to comprehend their distinctions.

Supervised learning is a method where the model is trained on labeled data. In this approach, the algorithm learns from input-output pairs, enabling it to predict the output for new data points accurately. For instance, a supervised learning model can be trained to recognize handwritten digits by learning from a dataset containing images of digits and their corresponding labels. Common algorithms used in supervised learning include linear regression, logistic regression, and support vector machines. This type serves well in applications such as spam detection, image classification, and speech recognition.
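To make the idea of learning from input-output pairs concrete, here is a minimal sketch of logistic regression trained with batch gradient descent. It uses only the Python standard library; the toy dataset (hours studied versus pass/fail), the function names, and the learning rate are all illustrative assumptions rather than anything prescribed above, and a real project would more likely rely on a library such as Scikit-learn.

```python
import math

def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(xs, ys, lr=0.5, epochs=2000):
    """Fit a weight and bias for one feature by gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error on every labeled example
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def predict(w, b, x):
    """Return the predicted class label (0 or 1) for a new input."""
    return 1 if sigmoid(w * x + b) >= 0.5 else 0

# Hypothetical labeled data: hours studied -> passed the exam (1) or not (0)
hours = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
passed = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train_logistic_regression(hours, passed)
print(predict(w, b, 1.0), predict(w, b, 4.0))
```

The model never sees a rule such as "more than 2.5 hours means a pass"; it infers a decision boundary from the labeled examples alone, which is the defining trait of supervised learning.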

Conversely, unsupervised learning does not rely on labeled data for training. Instead, it seeks to identify underlying patterns or structures within the input data. This method helps in clustering, association, and dimensionality reduction tasks. A classic example of unsupervised learning is customer segmentation in marketing using algorithms like K-means clustering and hierarchical clustering, where the aim is to categorize customers based on purchasing behaviors or interests without predefined labels. Other notable applications include anomaly detection and market basket analysis.
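The customer segmentation idea can be sketched with a small K-means implementation (Lloyd's algorithm). The customer data, the two features, and the choice of starting centroids below are made up for illustration; production code would normally use a library implementation with smarter initialization.

```python
def nearest(point, centroids):
    """Index of the centroid closest to a 2-D point (squared Euclidean distance)."""
    distances = [(point[0] - c[0]) ** 2 + (point[1] - c[1]) ** 2 for c in centroids]
    return distances.index(min(distances))

def kmeans(points, centroids, iterations=10):
    """Alternate between assigning points to centroids and recomputing centroids."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        updated = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                mean_x = sum(p[0] for p in cluster) / len(cluster)
                mean_y = sum(p[1] for p in cluster) / len(cluster)
                updated.append((mean_x, mean_y))
            else:
                updated.append(old)  # keep an empty cluster's centroid where it was
        centroids = updated
    labels = [nearest(p, centroids) for p in points]
    return centroids, labels

# Hypothetical customers: (monthly spend in hundreds, store visits per month)
customers = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centroids, labels = kmeans(customers, centroids=[customers[0], customers[3]])
print(labels)
```

No labels are supplied at any point: the two groups emerge purely from how close the customers are to one another in feature space.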

Reinforcement learning, distinct from the previous two, involves an agent learning through interacting with an environment, optimizing its actions through trial and error to achieve long-term rewards. This method is characterized by its dynamic learning process, where the agent receives feedback in the form of rewards or penalties based on its actions. Reinforcement learning has shown remarkable results in areas like game playing, robotics, and autonomous driving. Algorithms such as Q-learning and deep Q-networks (DQN) are prominent in this domain.
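As a rough illustration of trial-and-error learning, the sketch below applies tabular Q-learning to a tiny corridor world. The environment (five cells with a reward at the right end), the hyperparameters, and all names are assumptions chosen to keep the example short; they are not taken from any particular library.

```python
import random

N_STATES = 5        # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, 1)   # action 0 moves left, action 1 moves right

def step(state, action_index):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values with an epsilon-greedy policy and the Q-learning update."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _episode in range(episodes):
        state = 0
        for _step in range(200):  # safety cap on episode length
            # Explore with probability epsilon, or when both actions look equal
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Target: immediate reward plus discounted best value of the next state
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q_table = q_learning()
policy = [row.index(max(row)) for row in q_table[:-1]]
print(policy)
```

The agent is never told that moving right is correct. It discovers this because the reward at the goal propagates backward through the value estimates, one update at a time.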

Understanding the distinct characteristics and appropriate use cases of supervised, unsupervised, and reinforcement learning is crucial for effectively leveraging machine learning across various disciplines.

Core Algorithms and Techniques

In the realm of machine learning, several core algorithms serve as the foundation for creating predictive models. Among the most widely recognized are linear regression, decision trees, support vector machines, and neural networks. Each algorithm comes with its unique strengths and is suitable for specific types of tasks.

Linear regression is a straightforward yet powerful algorithm used primarily for predicting numerical values. By establishing a linear relationship between input variables and the output, linear regression models are effective for tasks like house pricing predictions and sales forecasting. Decision trees, on the other hand, are a versatile and interpretable technique that can handle both classification and regression tasks. They recursively partition the data into subsets based on feature values, making them excellent for binary and multi-class classification problems.
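For a single input variable, the linear relationship can be fitted in closed form with ordinary least squares. The sketch below assumes a made-up set of house sizes and prices that lie exactly on a line, so the recovered slope and intercept are easy to check by hand; real data would be noisy and usually involve many features.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept with one feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: house size in square meters -> price in thousands
sizes = [50, 80, 100, 120]
prices = [110, 170, 210, 250]

slope, intercept = fit_line(sizes, prices)
predicted_price = slope * 90 + intercept  # estimate for a 90 square meter house
print(slope, intercept, predicted_price)
```

The slope is the estimated price change per additional square meter, which is what makes linear models easy to interpret.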

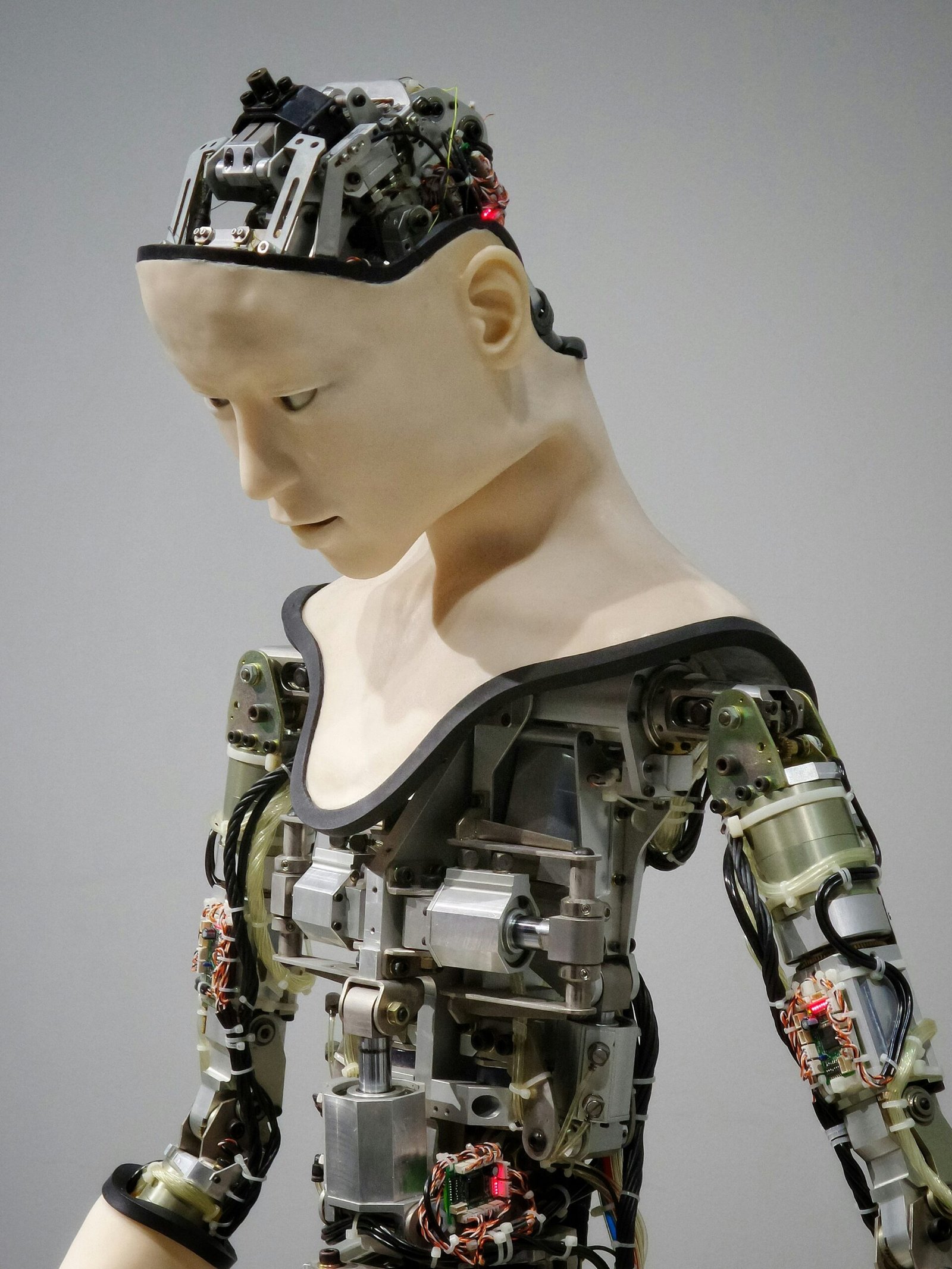

Support vector machines (SVMs) are highly effective for classification tasks, particularly when the data has a clear margin of separation. An SVM works by finding the hyperplane that separates the classes with the largest possible margin, that is, the greatest distance to the nearest training points on either side. Neural networks, loosely inspired by the structure of the human brain, are particularly noted for their performance in tasks involving large and complex datasets, such as image and speech recognition. Deep learning refers to neural networks with many layers of neurons, which allow the model to learn increasingly abstract representations of the data.
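One way to see what "best" means for a separating hyperplane is to measure its margin, the distance from the hyperplane to the closest training point. The sketch below does not train an SVM; it only compares two hand-picked candidate hyperplanes on a made-up dataset, with all names and numbers being illustrative assumptions.

```python
import math

def geometric_margin(w, b, data):
    """Smallest signed distance from any labeled point to the hyperplane w.x + b = 0.

    Labels are +1 or -1; a negative result means some point is misclassified.
    """
    norm = math.sqrt(sum(wi * wi for wi in w))
    return min(
        label * (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm
        for x, label in data
    )

# Hypothetical 2-D points with class labels
data = [((1, 1), -1), ((2, 1), -1), ((4, 4), 1), ((5, 4), 1)]

margin_a = geometric_margin((1, 1), -5.5, data)  # diagonal boundary x + y = 5.5
margin_b = geometric_margin((1, 0), -3.5, data)  # vertical boundary x = 3.5
print(margin_a, margin_b)
```

Both candidates classify every point correctly, but the diagonal boundary leaves more room on either side. An SVM searches over all hyperplanes for the one with the largest such margin.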

Critical to the success of these algorithms are techniques like training, validation, and cross-validation. Training involves feeding the model data to learn from, while validation uses held-out data to tune the model’s hyperparameters and to detect overfitting, where the model performs well on training data but poorly on unseen data. Cross-validation further helps to estimate the model’s generalizability by dividing the dataset into multiple folds and rotating which fold serves as the validation set.
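The fold rotation behind cross-validation can be sketched as a small generator that yields index splits. The function name and the choice of contiguous, unshuffled folds are assumptions made for brevity; in practice the data is usually shuffled first, and library helpers handle this.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

folds = list(k_fold_indices(n_samples=10, k=3))
for train, validation in folds:
    print("train:", train, "validation:", validation)
```

Each sample is used for validation exactly once and for training in every other round, so the averaged validation score draws on the whole dataset.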

Equally important is the process of feature engineering and data preprocessing. Feature engineering involves creating new features from raw data that can help improve model performance. Data preprocessing, which includes normalization, handling missing values, and encoding categorical variables, prepares the dataset for optimal results.
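The three preprocessing steps named above can each be sketched in a few lines. The helper names and sample values are hypothetical, and mean imputation and min-max scaling are just one common choice among several for handling missing values and normalization.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the known values."""
    known = [v for v in values if v is not None]
    mean = sum(known) / len(known)
    return [mean if v is None else v for v in values]

def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column carries no information
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(categories):
    """Encode each category as a 0/1 vector over the sorted set of categories."""
    vocabulary = sorted(set(categories))
    encoded = [[1 if c == v else 0 for v in vocabulary] for c in categories]
    return encoded, vocabulary

# Hypothetical raw columns
ages = [20, None, 40, 60]
colors = ["red", "blue", "red"]

scaled_ages = min_max_scale(impute_mean(ages))
encoded_colors, vocabulary = one_hot(colors)
print(scaled_ages, encoded_colors, vocabulary)
```

In a real pipeline, the imputation mean and the scaling range should be computed on the training set only and then reused on validation and test data, so that no information leaks from held-out samples.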

Applications of Machine Learning

Machine learning, a subset of artificial intelligence, is reshaping various industries by automating complex tasks and providing data-driven insights. In healthcare, machine learning algorithms are increasingly used to support diagnostics and treatment recommendations. For instance, IBM Watson Health (now Merative) applied machine learning to large clinical datasets with the aim of supporting cancer diagnosis and personalizing treatment plans based on individual patient profiles.

In the finance sector, machine learning is instrumental in fraud detection and market forecasting. Algorithms can scrutinize transaction patterns to identify fraudulent activities in real time. Firms like JPMorgan Chase use machine learning models to inform trading and investment decisions, although market movements remain difficult to predict reliably. These predictive capabilities are transforming financial risk management and investment strategies.

Marketing is another field experiencing significant evolution due to machine learning. Businesses leverage machine learning for personalized content delivery and customer segmentation. Amazon’s recommendation engine, for instance, analyzes user behavior to suggest products tailored to individual preferences, thus enhancing customer engagement and satisfaction. Similarly, machine learning models help marketers identify distinct customer segments, allowing for more targeted and effective marketing campaigns.

The automotive industry is making strides with autonomous vehicles, relying heavily on machine learning for navigation and safety. Companies like Tesla employ neural networks to process inputs from various sensors, enabling vehicles to interpret their surroundings accurately and make real-time decisions. This technology is crucial for enhancing the safety and reliability of self-driving cars, advancing the goal of fully autonomous transportation.

These examples underline the transformative impact of machine learning across sectors, driving innovation and efficiency. The continuous advancements and integration of machine learning technologies promise to further expand their applications, heralding a future of enhanced automation and smarter solutions in various domains.

Challenges and Limitations

Machine learning, despite its rapid advancements and widespread applicability, faces several significant challenges and limitations. One of the foremost challenges is related to data quality. High-quality, relevant data is fundamental for building effective machine learning models. However, obtaining, cleaning, and preprocessing such data is often time-consuming and resource-intensive. Furthermore, incomplete, noisy, or biased data can severely degrade the performance of machine learning models, leading to inaccurate or unreliable predictions.

Another critical challenge is model interpretability. Complex machine learning techniques, such as deep learning, often operate as “black boxes,” making it difficult to understand how they arrive at their decisions. This lack of transparency can be problematic, especially in sensitive domains like healthcare and finance, where understanding the reasoning behind a model’s prediction is crucial for trust and accountability.

Bias and fairness are also significant concerns in the field of machine learning. Models trained on biased datasets can perpetuate and even exacerbate existing inequalities. Ensuring that machine learning systems are fair and unbiased requires careful consideration of the training data and the implementation of techniques to mitigate bias. This is an active area of ongoing research, with various approaches being developed to enhance the fairness of machine learning models.
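Mitigating bias starts with measuring it. One simple and widely used check is the demographic parity difference, the gap in positive-prediction rates between groups. The sketch below is an illustration only: the predictions and group labels are invented, and this is just one of several fairness metrics, which can disagree with one another.

```python
def positive_rate(predictions, groups, group):
    """Share of positive predictions (1s) among members of one group."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    return sum(selected) / len(selected)

def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups."""
    rates = [positive_rate(predictions, groups, g) for g in set(groups)]
    return max(rates) - min(rates)

# Hypothetical model outputs (1 = approved) and group membership
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_difference(predictions, groups)
print(gap)
```

A gap of zero means every group receives positive predictions at the same rate. A large gap does not prove discrimination on its own, but it flags a model that deserves closer inspection.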

Data privacy is another fundamental issue in machine learning. The use of large datasets often involves sensitive personal information, raising concerns about data security and privacy. Compliance with regulations such as the GDPR in the European Union and the CCPA in California is essential, and researchers are exploring privacy-preserving techniques, such as federated learning and differential privacy, to address these concerns.
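To give a flavor of differential privacy, the sketch below implements the Laplace mechanism for a counting query: the true count is released with random noise whose scale depends on the privacy parameter epsilon. The dataset, function names, and epsilon value are assumptions for illustration, and a real deployment should use a vetted library rather than hand-rolled noise.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Release a count with Laplace noise calibrated to sensitivity 1.

    Adding or removing one record changes a count by at most 1,
    so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical sensitive data: patient ages
ages = [23, 35, 41, 52, 67, 29]

noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=random.Random(0))
print(noisy)
```

A smaller epsilon adds more noise and gives stronger privacy at the cost of accuracy. Any single released value is noisy, but the noise averages out to zero, so aggregate statistics remain useful.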

Lastly, the need for substantial computational resources can pose a significant hurdle. Training state-of-the-art machine learning models often requires powerful hardware and substantial energy consumption. This can be a limiting factor for many organizations, particularly startups and institutions with limited budgets. Progress in algorithmic optimization and more efficient hardware is crucial to making machine learning more accessible and sustainable.

Tools and Frameworks

Machine learning development has been significantly enhanced by a suite of robust tools and frameworks that simplify and accelerate the process. Among the most noteworthy are TensorFlow, PyTorch, Scikit-learn, and Keras, each offering unique capabilities that cater to various aspects of machine learning.

TensorFlow, developed by Google, is a comprehensive and flexible library well-suited for both research and production. Its extensive community support and rich ecosystem of tools enable developers to build complex neural network architectures with ease. TensorFlow’s versatility is further demonstrated by its integration capabilities with other technologies, such as TensorFlow Lite for mobile deployments and TensorFlow.js for browser-based applications.

PyTorch, an open-source library created by Facebook’s AI Research lab, has gained popularity for its dynamic computation graph and user-friendly interface. Its intuitive design allows for rapid prototyping, making it an excellent choice for research projects. PyTorch is embraced by the academic community and offers extensive documentation and tutorials, making it accessible for beginners.

Scikit-learn is a Python-based library that specializes in traditional machine learning algorithms for tasks such as classification, regression, clustering, and dimensionality reduction. Its straightforward interface and comprehensive documentation make it an ideal starting point for newcomers. Scikit-learn also integrates smoothly with other data science libraries like NumPy and pandas, allowing for a seamless workflow in data preprocessing and analysis.

Keras, originally an independent library and later integrated into TensorFlow as tf.keras, is designed for rapid development of neural networks. Its high-level interface simplifies the process of building and testing models, making it a favorite among beginners and experts alike. Keras hides much of the complexity of the underlying backend, and since Keras 3 it can run on JAX and PyTorch as well as TensorFlow.

For those new to machine learning, starting with these frameworks can be straightforward. Extensive online resources, including tutorials, documentation, and community forums, are available to guide beginners through the initial steps of their machine learning journey. By leveraging these powerful tools, developers can efficiently create, test, and deploy machine learning models, paving the way for innovation and discovery.

Future Trends in Machine Learning

As the field of machine learning continues to evolve, several emerging trends are set to define its future landscape. One such trend is the rise of explainable AI. Given the growing reliance on machine learning models, there is an increasing demand for transparency and interpretability in AI-driven decisions. Explainable AI aims to make the internal workings of complex models comprehensible to humans, thus fostering trust and accountability in AI systems.

Another significant development is automated machine learning (AutoML). AutoML is revolutionizing the way machine learning models are created by automating the end-to-end process of model selection, training, and deployment. This democratizes machine learning, enabling professionals with varied expertise levels to build and deploy sophisticated models without in-depth knowledge of the underlying algorithms.

Edge computing is also becoming a pivotal trend in the machine learning arena. By processing data locally at the edge of the network, rather than relying solely on centralized cloud servers, edge computing enhances real-time analytics and reduces latency. This is particularly crucial for applications requiring immediate responses, such as autonomous vehicles and Internet of Things (IoT) devices.

Advancements in hardware are expected to further accelerate the capabilities of machine learning. Quantum computing may eventually speed up certain optimization problems relative to classical computers, although a practical advantage for machine learning workloads has yet to be demonstrated. Additionally, the development of specialized AI chips, such as Tensor Processing Units (TPUs) and neuromorphic chips, is improving processing speed and energy efficiency, making machine learning more accessible and scalable.

The increased adoption of machine learning also brings potential societal impacts and ethical considerations. Questions regarding data privacy, bias in AI systems, and the future of employment in an AI-driven world necessitate ongoing discussions and regulatory frameworks. Ethical AI development must prioritize fairness, transparency, and accountability to ensure the technology benefits society as a whole.

Getting Started with Machine Learning

Embarking on a journey into the realm of machine learning can be an intellectually rewarding experience. To begin, it is crucial to build a strong foundation in certain core areas such as mathematics, statistics, and programming, particularly in Python, which is the predominant language used in machine learning. Understanding basic concepts in linear algebra, calculus, probability, and statistics will serve as invaluable tools in your machine learning toolbox.

For those starting out, a plethora of educational resources are available to help you gain a comprehensive understanding of machine learning. Online courses from platforms such as Coursera, edX, and Udacity offer structured content and hands-on exercises. Notable courses include Andrew Ng’s Machine Learning course on Coursera and the Deep Learning Specialization, which provide deep insights into fundamental and advanced topics.

Books also serve as excellent resources for in-depth theoretical and practical knowledge. “An Introduction to Statistical Learning” by James, Witten, Hastie, and Tibshirani, and “Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow” by Aurélien Géron are particularly recommended for beginners. These texts cover essential algorithms and techniques, along with hands-on examples that reinforce learning through practical application.

Hands-on practice is paramount for mastering machine learning. Engaging in personal projects helps consolidate theoretical knowledge through practical application. Platforms such as Kaggle offer an array of datasets and competitions that enable you to test your skills, experiment with different algorithms, and share insights with like-minded individuals. Participation in these communities not only enhances your learning but also helps in building a professional network.

Finally, as you venture deeper into the field, consider contributing to open-source projects and engaging with the broader AI community through forums, webinars, and conferences. Networking with professionals and experts can provide valuable mentorship and open doors to career opportunities. Continuously updating your skills and staying abreast of the latest advancements will ensure sustained growth and success in your machine learning career.
